Just as we have all, more or less, boarded the AI train, the EU has offered a timely reminder with its recent passing of the AI Act: whilst AI is undoubtedly a force for good when used well, the AI landscape needs some taming as we humans catch up with its exponential proliferation throughout all areas of life.

-

What is it?

The AI Act, or to give it its official name (pausing for breath): Regulation (EU) 2024/1689 of the European Parliament and of the Council of 13 June 2024 laying down harmonised rules on artificial intelligence and amending Regulations (EC) No 300/2008, (EU) No 167/2013, (EU) No 168/2013, (EU) 2018/858, (EU) 2018/1139 and (EU) 2019/2144 and Directives 2014/90/EU, (EU) 2016/797 and (EU) 2020/1828 (Artificial Intelligence Act), creates one of the first and most comprehensive regulatory frameworks for artificial intelligence in the world.

Through this ambitious framework, the EU hopes to promote the adoption of AI systems across the EU that are:

- Safe

- Transparent

- Traceable

- Sustainable

- Non-discriminatory

It is worth highlighting at the outset that the vast majority of AI systems currently used in the EU fall within the limited or minimal risk categories and may continue to be used relatively freely. But before we sit back and ask ChatGPT to devise a recipe for supper, please read on.

-

Limited risk

‘Limited risk’ AI systems are those that do not pose a significant threat to safety, fundamental rights or consumer protection but could potentially still have some level of adverse impact.

The key regulatory driver for limited risk AI systems is transparency, so that users are aware when they are interacting with an AI system, for example when using chatbots, or through watermarks on AI generated content.

In the case of “deep fakes”, AI deployers (essentially the users of an AI system) are also under an obligation to clearly disclose that content has been artificially generated. Where the AI content forms part of a work that is evidently artistic, the disclosure obligation is limited to disclosing the existence of the AI content in a way that does not hamper the display or enjoyment of the work.

-

GPAIs – ChatGPT and other LLMs

A brief note on General Purpose AI models, or GPAIs: these are not in themselves AI systems (although many AI systems will incorporate one or more GPAIs), but they are subject to certain regulatory requirements given their complexity, reliance on self-supervision and risk to fundamental rights, health and safety.

GPAIs, such as large language models like ChatGPT, can process a variety of information inputs and can be applied to a wide range of different tasks across a range of fields. They are also frequently used as a pre-trained foundation for more specific AI systems.

Under the AI Act, providers of GPAIs must:

- Create a pack of technical information, including details on training and testing, to be provided to relevant regulators on request

- Provide certain technical information to providers of AI systems incorporating the GPAI, along with any information needed to give the provider a good understanding of the capabilities and limitations of the GPAI

- Have a copyright policy in place to ensure that EU copyright is respected by the GPAI (many will be aware of some of the murky copyright issues raised, for example the legal claims issued by Getty Images alleging that Stability AI scraped millions of Getty images without permission) and

- Publish a summary of the content used to train the model (the EU regulator will be publishing a template for use by GPAI providers in due course).

-

High risk and banned AI systems

High risk AI systems will be subject to the highest burden of regulation under the AI Act due to the potential high risk posed to the health and safety or fundamental rights of EU citizens. This will include (subject to some narrow exceptions) AI systems intended for use in:

- Biometric identification or categorisation and emotion recognition

- Critical infrastructure (for example, managing road traffic)

- Education and training

- Employment related decision making (for example, performance reviews)

- Managing access to public services, insurance and credit

- Law enforcement

- Migration and border control

- Administration of justice and democratic processes

Under the AI Act, high risk AI systems will require appropriate risk management systems and will be required to comply with minimum criteria in respect of security, training, data collection and bias. Providers of high risk AI systems will be required to provide certain technical information, and the system itself must keep an ongoing event log (which, for remote biometric identification systems, must include details of the reference database against which input data is checked).

Although the majority of obligations fall upon the providers of the AI system, users or “deployers” of high risk AI systems are also subject to obligations to ensure the proper use of the system and provide an important element of human oversight.

The most intrusive AI systems of all will be banned by the EU from February 2025, including (subject to specific exceptions) AI systems:

- That distort behaviour through subliminal, manipulative or deceptive techniques or by exploiting vulnerabilities

- That classify individuals based on their behaviour, socio-economic status or personal characteristics

- That categorise and identify individuals using biometrics, such as through the untargeted scraping of facial images

-

What about Brexit, does the EU still matter?

Despite Brexit, the AI Act will be relevant to anyone with an establishment in the EU, to anyone whose AI generated output is to be used in the EU, and to anyone placing AI systems or GPAIs on the market in the EU. This may sound all too familiar to UK SMEs grappling with GDPR compliance post Brexit.

Enforcement continues to draw parallels with the GDPR regime: for SMEs that fail to comply with the EU AI Act, fines can reach a hefty EUR 7.5 million or 1 percent of worldwide annual turnover (whichever is the lower) for supplying incorrect, incomplete or misleading information to the regulator, rising to a maximum of EUR 35 million or 7 percent of worldwide annual turnover (whichever is the lower) for the most serious breaches.
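To see how the "whichever is the lower" rule plays out for an SME, here is a minimal Python sketch. It models only the two fine tiers mentioned above (EUR 7.5 million or 1 percent, and EUR 35 million or 7 percent); the function name `sme_max_fine`, the tier labels and the turnover figure are hypothetical and purely illustrative. It computes only the upper limit of a fine; the amount actually imposed in any given case would be set by the regulator.

```python
# Minimal sketch of the SME fine caps under the EU AI Act.
# Assumption: only the two tiers mentioned in this article are modelled; the
# tier labels and the function name are hypothetical, for illustration only.

# tier label -> (fixed cap in EUR, percentage of worldwide annual turnover)
FINE_TIERS = {
    "incorrect_information": (7_500_000, 1),
    "most_serious_breach": (35_000_000, 7),
}


def sme_max_fine(tier: str, worldwide_turnover_eur: float) -> float:
    """Upper limit of the fine for an SME: the LOWER of the fixed cap and the turnover percentage."""
    fixed_cap, percent = FINE_TIERS[tier]
    turnover_cap = worldwide_turnover_eur * percent / 100
    return min(fixed_cap, turnover_cap)


if __name__ == "__main__":
    turnover = 20_000_000  # illustrative worldwide annual turnover in EUR
    for tier in FINE_TIERS:
        print(f"{tier}: up to EUR {sme_max_fine(tier, turnover):,.0f}")
```

For an SME with worldwide annual turnover of EUR 20 million, the caps work out at EUR 200,000 and EUR 1.4 million respectively, well below the headline fixed amounts. For businesses that are not SMEs, the Act applies whichever figure is higher, so the comparison flips.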

-

What now?

There are a couple of key dates on the immediate horizon:

- 2 February 2025: the ban on the most intrusive AI systems comes into effect

- 2 August 2025: obligations in respect of GPAIs come into force, although GPAIs already on the market before 2 August 2025 will have an additional two years (until 2 August 2027) to comply (as a result of which we may well see a flurry of new product releases in the next 10 months or so).

We are already starting to see large customers flow down AI Act compliance obligations through to their supplier contracts (with little regard as to whether or not the supplier is actually providing an AI system).

As a starting point, SMEs should map and keep track of the AI used across the business and the purposes it is used for, and undertake a risk assessment. Most likely, current AI will be a limited risk AI system or a GPAI. Even though the EU is yet to clarify exactly what information will need to be shared under the AI Act, when entering into new contracts with AI providers (or renewing existing ones), start to include obligations on AI providers to:

- Ensure that IP rights in the AI output are transferred to the customer

- Proactively put together the relevant technical information and make it available where appropriate

- Provide a summary of how the AI is trained (and ensure that customer data, and particularly personal data, is not used for training purposes) and

- Comply with the AI Act more generally as and when the various provisions come into force

The AI arena looks set for a shake-up no smaller than GDPR. Please get in touch if you’d like some help.

Written by Laura Perkins

Principal at My Inhouse Lawyer

One of our values (Growth) is, in many ways, all about cultivating a growth mindset. We are passionate about learning, improving and evolving. We learn from each other, use the best know-how tools in the market and constantly look for ways to simplify. Lawskool is our way of sharing with you. It isn’t intended to be legal advice, rather to enlighten you to make smart business decisions day to day with the benefit of some of our insight. We hope you enjoy the experience. There are some really good ideas and tips coming from some of the best inhouse lawyers. Easy to read and practical. If there’s something you’d like us to write about or some feedback you wish to share, feel free to drop us a note. Equally, if it’s legal advice you’re after, then just give us a call on 0207 939 3959.

Like what you see? Book a discovery call

How it works

1

You

It starts with a conversation about you. What you want and the experience you’re looking for

2

Us

We design something that works for you whether it’s monthly, flex, solo, multi-team or includes legal tech

3

Together

We use Workplans to map out the work to be done and when. We are responsive and transparent

Like to know more? Book a discovery call

Freedom to choose & change

MONTHLY

A responsive inhouse experience delivered via a rolling monthly engagement that can be scaled up or down by you. Monthly Workplans capture scope, timings and budget for transparency and control

FLEX

A more reactive yet still responsive inhouse experience for legal and compliance needs as they arise. Our Workplans capture scope, timings and budget putting you in control

PROJECT

For those one-off projects such as M&A or compliance yet delivered the My Inhouse Lawyer way. We agree scope, timings and budget before each piece of work begins

Ready to get started? Book a discovery call

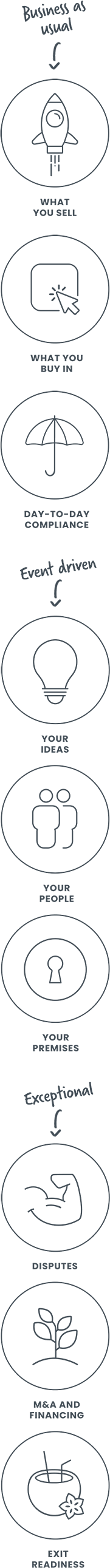

How we can help