As SMEs think about using AI to grow, we’re beginning to be asked about the legal issues.

As we said recently: law and regulation are still playing catch-up with the technology, which is moving at lightning speed [Read our article, What to Include in your AI Policy].

We can’t predict exactly what will happen, but we can say what lawmakers are thinking about at a high level, which indicates the direction of travel.


Where the EU leads, the UK will follow

The UK government is still discussing AI in general terms. There’s no sign of legislation yet, but when it comes it may well follow the EU’s lead.

The EU

The EU is planning:

- an Act to prevent risks posed by AI from arising; and

- an AI Liability Directive, which will provide remedies for those harmed by AI.

The Act will define AI and introduce concepts such as providers (developers and marketers of AI systems) and deployers (users of AI systems, including end users). It will prohibit certain practices, including the following, which are relevant to SMEs:

- AI that uses subliminal techniques with the aim or effect of materially distorting a person’s behaviour in a way that causes, or is reasonably likely to cause, harm.

- AI that exploits the vulnerabilities of specific groups (for example, due to age, disability or economic situation), again with the aim or effect of materially distorting a person’s behaviour in a way that causes, or is reasonably likely to cause, harm.

- AI that creates a social score for a person in certain ways, leading to certain types of detrimental or unfavourable treatment.

Unsurprisingly, certain high-risk AI systems will need to have a risk management system. Those relevant to SMEs include remote biometric identification, educational and vocational uses, and some employment and recruitment uses.

And the penalties: the EU is known for severe fines, and this will be no exception. For using prohibited practices, the fine will be the higher of 6% of turnover or €30m. For other breaches, it will be the higher of 4% of turnover or €20m. For providing incorrect, incomplete or misleading information to the authorities, it will be the higher of 2% of turnover or €10m.

However, the killer point is the change in approach to AI-related disputes. The thinking is that someone who believes they have been harmed by AI will find it very hard to establish liability, because the inner workings of AI are so complex. So the idea is that a person who claims to have been damaged by AI will not have to prove the causal link in full: subject to certain conditions, it will be presumed that the AI caused the harm, and it will be for the AI provider or deployer to prove that it did not.

The law is already clear about two common misperceptions, both stemming from the “I, Robot fallacy” (the tendency to regard robots and other AI systems as human or quasi-human). First, AI does not have a separate legal personality: its operator does. Second, and as a result, AI cannot be an agent with legal rights of its own.

Some UK specifics

Agency law: as above, it’s clear that AI systems cannot act as agents for principals. An AI system is merely a combination of goods, intellectual property rights and data belonging to the system’s owner, provided to users under a licence or as a service.

Data protection (GDPR): AI does not get any special treatment, but it is a priority area for the ICO, which regards AI as differing from ordinary data controlling and processing in five ways: (i) the use of algorithms; (ii) the opacity of processing; (iii) the tendency to collect all available data; (iv) the repurposing of data; and (v) the use of new types of data. The ICO has published a handful of useful papers for further reading.

AI and contract law: there are potential issues around smart contracts. For example, in a chain of blockchain-enabled contracts, there is a risk that the contractual link is broken.

AI and intellectual property: there are questions around who owns copyright in AI-created material (as it is not the creation of a human author), and around trademark infringement (public perception of a trademark may become a less important factor if purchasing is widely carried out by AI).

Tort: this is thought likely to be the area that sees the most significant AI-influenced legal developments. Product liability will of course be relevant for autonomous vehicles, robots and other mobile AI-enabled or autonomous systems, and breach of statutory duty may also apply depending on the regulatory context.

In negligence, for example, a question might arise over whether a doctor who chose not to use an AI diagnostic tool was negligent.

In nuisance and negligence, issues are expected to arise if, for example, an autonomous vehicle runs amok. The relevant principle is that if someone brings something on to their land and it escapes, the owner is liable. It has been applied to water, electricity, vehicles and even cattle.

Back with negligence, traditional principles-based approaches will remain relevant, and legislators could draw on a wide variety of sources. The law has been dealing with issues relating to sentient and tame (or not so tame) non-human beings for a very long time. It’s perhaps not too far-fetched to suggest that the liability principles in (for example) the Animals Act 1971, which deals with damage caused by animals, might appeal to legislators looking to establish liability for material damage caused by a machine run by AI.

It may, of course, be humbling for AI proponents, who see their work as among the highest examples of human creativity, that the law might yet treat their creations as merely bovine. Nevertheless, like keepers of livestock and zoo animals, AI keepers (providers or deployers) should ensure that they have adequate insurance against negligence and other tort claims arising from their charges.

We at MIL are increasingly being asked by SMEs about the legal aspects of AI. Do get in touch if you’d like to discuss an issue. With a technology that’s as new to most of us as this one, no question is too basic.

Written by James McLeod

Principal at My Inhouse Lawyer

One of our values (Growth) is, in many ways, all about cultivating a growth mindset. We are passionate about learning, improving and evolving. We learn from each other, use the best know-how tools in the market and constantly look for ways to simplify. Lawskool is our way of sharing with you. It isn’t intended to be legal advice, rather to enlighten you to make smart business decisions day to day with the benefit of some of our insight. We hope you enjoy the experience. There are some really good ideas and tips coming from some of the best inhouse lawyers. Easy to read and practical. If there’s something you’d like us to write about or some feedback you wish to share, feel free to drop us a note. Equally, if it’s legal advice you’re after, then just give us a call on 0207 939 3959.



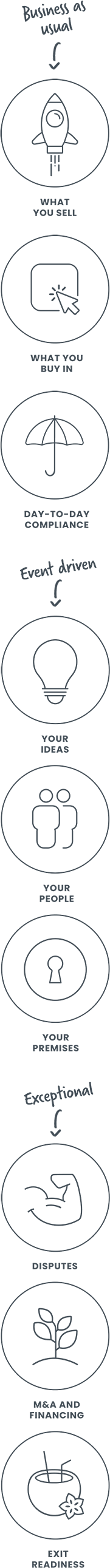

How we can help